AEM Image Metadata Generated by AI

Jorge Chércoles, April 6, 2023

We have enjoyed integrating Adobe Experience Manager (AEM) with AI tools. So far, our Director of Technology, Clemente Pereyra, has talked about the potential for using AI with AEM and shown off our prototype for creating metadata, and I have shared a proof of concept I created to generate Tweets for written content.

If you’re using AEM for your website, you’re probably also using AEM Assets for your digital asset management (DAM). AI has great potential there too.

Why would I use AI with AEM Assets?

Key metadata, such as the alt text attached to images, is one of several factors that affect a site's search engine optimization (SEO). Good image descriptions are also critical to providing the best experience for site visitors who are visually impaired, for example, those using screen readers.

Beyond SEO, some image metadata makes the DAM itself easier to use and helps people find related images. Other metadata, such as tags, can be used to build collections of related items. The more images or files in the DAM, the more important this becomes for keeping it organized.

Writing metadata of any sort for images can be time-consuming, and tags in particular must be applied consistently. Adobe Sensei, Adobe's AI technology built into AEM, can power smart tagging. But AI can help write other metadata too; in this case, we'll use it to write an image description.

Adding an image description in AEM Assets using AI

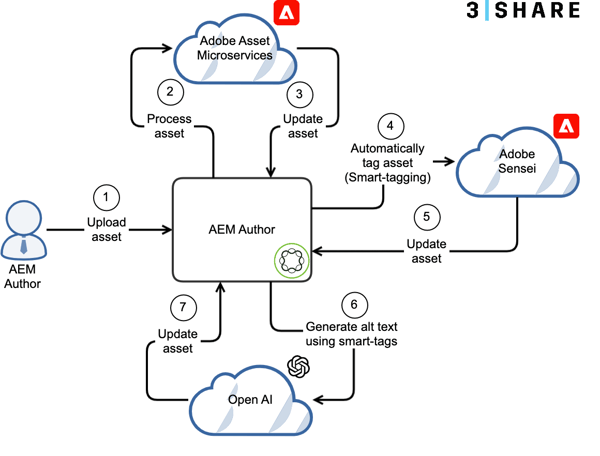

We have integrated AEM with both Adobe Sensei and OpenAI to take advantage of each platform's strengths.

Everything starts as soon as an image is uploaded to the AEM DAM. By configuring the Assets Cloud Post-Processing workflow, we process every image uploaded to the DAM. For each image, Adobe Sensei analyzes it and assigns the corresponding smart tags. We then use an OpenAI GPT model to write alt text based on those smart tags and store it in the image's metadata as its description.

It's worth noting that there are other ways to do this. Because we generate the alt text from the smart tags that already exist (instead of processing the image itself), the image's metadata stays consistent and the cost is significantly lower.
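To make the tag-to-alt-text step more concrete, here is a minimal, dependency-free sketch in Java. It is not the code from our implementation. The class and method names (`AltTextPrompt`, `buildPrompt`, `buildRequestBody`, `send`), the prompt wording, and the model name `gpt-3.5-turbo` are all assumptions for illustration. The sketch shows how a list of smart tags can be deduplicated, turned into a prompt, and wrapped in a request for OpenAI's Chat Completions endpoint. Wiring this into the AEM workflow and writing the returned text to the asset's description field (typically `dc:description`) is not shown.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.List;
import java.util.stream.Collectors;

/**
 * Hypothetical helper (illustrative only): turns an image's smart tags into
 * an alt-text prompt and a Chat Completions request body.
 */
class AltTextPrompt {

    // Assumed model name; substitute whichever GPT model you actually use.
    static final String MODEL = "gpt-3.5-turbo";

    /** Trims, drops empty entries, removes duplicates, and builds the prompt. */
    static String buildPrompt(List<String> smartTags) {
        String tags = smartTags.stream()
                .map(String::trim)
                .filter(t -> !t.isEmpty())
                .distinct()
                .collect(Collectors.joining(", "));
        return "Write a one-sentence alt text for an image described by these tags: "
                + tags + ". Do not start with \"Image of\".";
    }

    /** Minimal JSON escaping for backslashes, quotes, and newlines. */
    static String jsonEscape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    /** Builds the JSON body; a real project would use a JSON library instead. */
    static String buildRequestBody(List<String> smartTags) {
        return "{\"model\":\"" + MODEL + "\","
                + "\"messages\":[{\"role\":\"user\",\"content\":\""
                + jsonEscape(buildPrompt(smartTags)) + "\"}]}";
    }

    /**
     * Sends the request and returns the raw JSON response. The generated alt
     * text would then be read from choices[0].message.content. Not called in
     * main, so running this example makes no network call.
     */
    static String send(String apiKey, String body) throws Exception {
        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("https://api.openai.com/v1/chat/completions"))
                .header("Authorization", "Bearer " + apiKey)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }

    public static void main(String[] args) {
        // Example smart tags, including a duplicate and stray whitespace.
        List<String> tags = List.of("dog", "beach", " sunset ", "dog");
        System.out.println(buildRequestBody(tags));
    }
}
```

Because only the tag text is sent, the request stays small, which is consistent with the cost advantage described above; sending the image itself to a vision-capable model would be the alternative approach.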

The image below shows the architecture of this implementation.

Conclusion

Are you ready to incorporate AI into your AEM instance? Or maybe there is another feature you want to add or use case you need to address. 3|SHARE would love to help. We’d also love to know what you’re doing with AI so far. Contact us here.

Jorge Chércoles

Jorge Chércoles is an AEM Backend Developer at 3|SHARE. His passion is coding, whether at work or outside of it. He loves that it gives him the ability to create things out of nothing. At 3|SHARE, Jorge enjoys the work environment and the independence he has in his job. He is an Adobe Certified Expert, having earned the Adobe Experience Manager Sites Developer certification.